AWS Notes – AWS Storage and Database Architecture Best Practices

Originally published on 11/14/2013

AWS Enterprise Solutions Architect Siva Raghupathy started by stating that 2.7 zettabytes (ZB) of data exists in the digital universe today. There will be 450 billion transactions per day by 2020. Most data is unstructured text.

How should we be handling all this data? It is about finding the right tool for the job. He broke down the AWS services into different categories based on the types of problems being solved.

First there are the primitive compute and storage options, which behave much like a raw hard disk. They add flexibility, because you can host any major data storage technology on them, but they come with an operational burden.

Next there are the managed AWS services, which he arranged by query complexity (simple vs. complex) and by data type (structured vs. unstructured). He included blob stores like S3 and Glacier, which hold unstructured data that is not tied to any query.

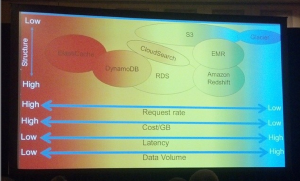

He often asks his customers, “What is the temperature of your data?” Hot data is small, needs low latency and has a very high request rate. Cold data is vast, mostly static and infrequently requested. Warm data is somewhere in between. He then mapped the various AWS storage services from hot to cold.

He spoke about cost-conscious design and demonstrated the concept with an example. He fired up the AWS Simple Monthly Calculator to work out which AWS data storage service to use based on cost. In his example, one would first think S3 was the appropriate solution, but after running the numbers through the calculator we saw that, because of all the small objects, DynamoDB was the better solution at less than 10% of the cost. You can use the calculator to validate your architecture design. In his view, the best design is the one that costs the least.
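The arithmetic behind that kind of comparison can be sketched roughly as follows. This is only an illustration of why many small objects shift the balance: one store is assumed to charge per request, the other per provisioned unit of throughput per hour. The function names, the assumption that each small request consumes exactly one capacity unit, and above all the prices are hypothetical placeholders, not actual AWS rates and not the figures used in the talk.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600
HOURS_PER_MONTH = 30 * 24
GB = 1024 ** 3


def storage_gb(objects, size_bytes):
    """Total data at rest, in GB."""
    return objects * size_bytes / GB


def per_request_cost(objects, size_bytes, writes_per_s, reads_per_s, *,
                     gb_month, per_1k_writes, per_10k_reads):
    """Monthly cost for a store that bills storage plus every request."""
    storage = storage_gb(objects, size_bytes) * gb_month
    writes = writes_per_s * SECONDS_PER_MONTH / 1_000 * per_1k_writes
    reads = reads_per_s * SECONDS_PER_MONTH / 10_000 * per_10k_reads
    return storage + writes + reads


def provisioned_cost(objects, size_bytes, writes_per_s, reads_per_s, *,
                     gb_month, write_unit_hour, read_unit_hour):
    """Monthly cost for a store that bills storage plus provisioned throughput.

    Assumes (hypothetically) one capacity unit per small request per second.
    """
    storage = storage_gb(objects, size_bytes) * gb_month
    throughput = HOURS_PER_MONTH * (writes_per_s * write_unit_hour
                                    + reads_per_s * read_unit_hour)
    return storage + throughput


if __name__ == "__main__":
    # Placeholder workload and prices; substitute the current published rates.
    workload = dict(objects=1_000_000_000, size_bytes=2_048,
                    writes_per_s=300, reads_per_s=300)
    a = per_request_cost(**workload, gb_month=0.10,
                         per_1k_writes=0.005, per_10k_reads=0.004)
    b = provisioned_cost(**workload, gb_month=0.25,
                         write_unit_hour=0.001, read_unit_hour=0.0002)
    print(f"per-request store: ${a:,.2f}  provisioned store: ${b:,.2f}")
```

With small objects, storage is a minor term and the request or throughput term dominates, which is why the result can differ so much from first intuition.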

You can get further savings by moving data from one store to another as it cools down.
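One way to automate that move, offered here as an assumption rather than something shown in the talk, is an S3 lifecycle rule that transitions objects to Glacier after a set number of days. The sketch below uses boto3; the bucket name, prefix, rule ID and 30-day threshold are hypothetical.

```python
def build_cooling_rule(prefix, days_until_cold, rule_id="archive-cold-data"):
    """Build an S3 lifecycle configuration that moves objects to Glacier."""
    return {
        "Rules": [
            {
                "ID": rule_id,                      # hypothetical rule name
                "Filter": {"Prefix": prefix},       # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [
                    {"Days": days_until_cold, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }


def apply_rule(bucket, config):
    """Attach the lifecycle configuration to a bucket (needs AWS credentials)."""
    import boto3  # imported lazily so the builder works without boto3 installed

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


if __name__ == "__main__":
    config = build_cooling_rule(prefix="logs/", days_until_cold=30)
    print(config)
    # apply_rule("example-bucket", config)  # hypothetical bucket name
```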

Next he moved on to the AWS database services, starting with RDS. He said to use it for transactions and complex queries, but not for massive numbers of reads and writes or for simple queries that NoSQL handles better. It is also important to pick the right RDS DB instance class.

When should you use DynamoDB? He said pretty much whenever you can. The only times you wouldn’t use it are for complex queries and transactions, or for cold data. For DynamoDB best practices: keep item sizes small, store large blobs in S3 with their metadata in DynamoDB, and use a hash key for extremely high scale.
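The "large blobs in S3, metadata in DynamoDB" practice might look something like the sketch below. It assumes boto3-style low-level clients are passed in (for example `boto3.client("s3")` and `boto3.client("dynamodb")`); the key layout, attribute names and the checksum field are illustrative choices, not something prescribed in the talk.

```python
import hashlib


def store_blob(s3, dynamodb, bucket, table, item_id, payload):
    """Write the payload to S3 and a small pointer item to DynamoDB."""
    key = f"blobs/{item_id}"  # hypothetical key layout
    s3.put_object(Bucket=bucket, Key=key, Body=payload)

    # The DynamoDB item stays small: it only describes where the blob lives.
    item = {
        "id": {"S": item_id},  # hash key
        "s3_bucket": {"S": bucket},
        "s3_key": {"S": key},
        "size_bytes": {"N": str(len(payload))},
        "sha256": {"S": hashlib.sha256(payload).hexdigest()},
    }
    dynamodb.put_item(TableName=table, Item=item)
    return item
```

Readers then fetch the small item by its hash key and follow `s3_key` to the blob only when they need the payload itself.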

Last, he spoke about ElastiCache for speeding up reads/writes by caching frequent queries. Redis in particular is quite popular, but he noted that it is not a good option when data persistence is important.
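Caching frequent queries is commonly done with a cache-aside pattern, sketched below. The `cache` argument is assumed to be a redis-py style client (anything with `get` and `setex`), `run_query` stands in for whatever database call you are protecting, and the 60-second TTL is an arbitrary placeholder.

```python
import json


def cached_query(cache, key, run_query, ttl_seconds=60):
    """Return the cached result for key, running the query only on a miss."""
    hit = cache.get(key)
    if hit is not None:
        return json.loads(hit)  # cache hit: skip the database entirely

    result = run_query()  # cache miss: go to the database
    # Store with an expiry so stale entries age out on their own.
    cache.setex(key, ttl_seconds, json.dumps(result))
    return result
```

Because the cache is treated as disposable, losing it only costs a round of cache misses, which fits the point that it should not be relied on when persistence matters.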

He quickly wrapped things up by going over CloudSearch, the AWS search tool for unstructured text (don’t use it as a replacement for a database); Redshift, the data warehouse service for complex queries on large quantities of historical data (copy large data sets in from S3 or DynamoDB); and Elastic MapReduce (the “swiss army knife” for parallel scans of huge datasets).